
How Do You Know if a Text Is AI-Generated?

When the groundbreaking ChatGPT was launched in late 2022, teachers worldwide quickly realized that it would pose new challenges. The artificial intelligence chatbot can write all sorts of texts with great precision and skill, and teachers worried that students would soon use the technology to write their assignments. So how can teachers tell whether an essay was written by a student or by a bot?

Luckily, several new tools can help teachers spot the difference. This article lists online tools that attempt to detect AI-generated text. To evaluate their effectiveness, we tested whether several of them could distinguish between human-written and AI-generated text.

Method

To test these online tools, we used two texts. The first was written entirely by ChatGPT: 100% AI-generated and flawless. We wrote the second text ourselves, deliberately using unusual syntax, slight punctuation mistakes, and unnatural word choices to give it a human touch. Because it was somewhat flawed, this text served as an excellent counterexample. Here are the two texts we used:

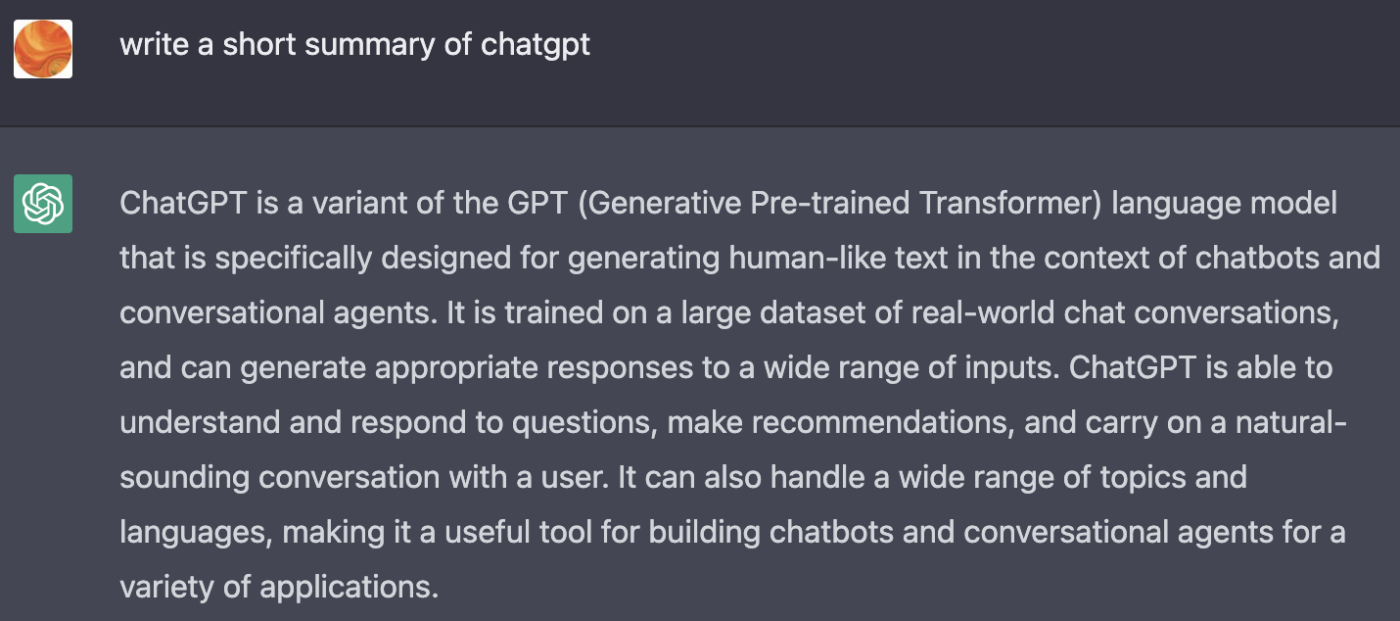

Text 1: Written by Artificial Intelligence

ChatGPT, 100% artificial intelligence, wrote this text.

Text 2: Written by us

Content writers at Scientist Factory, average intelligence, wrote this text.

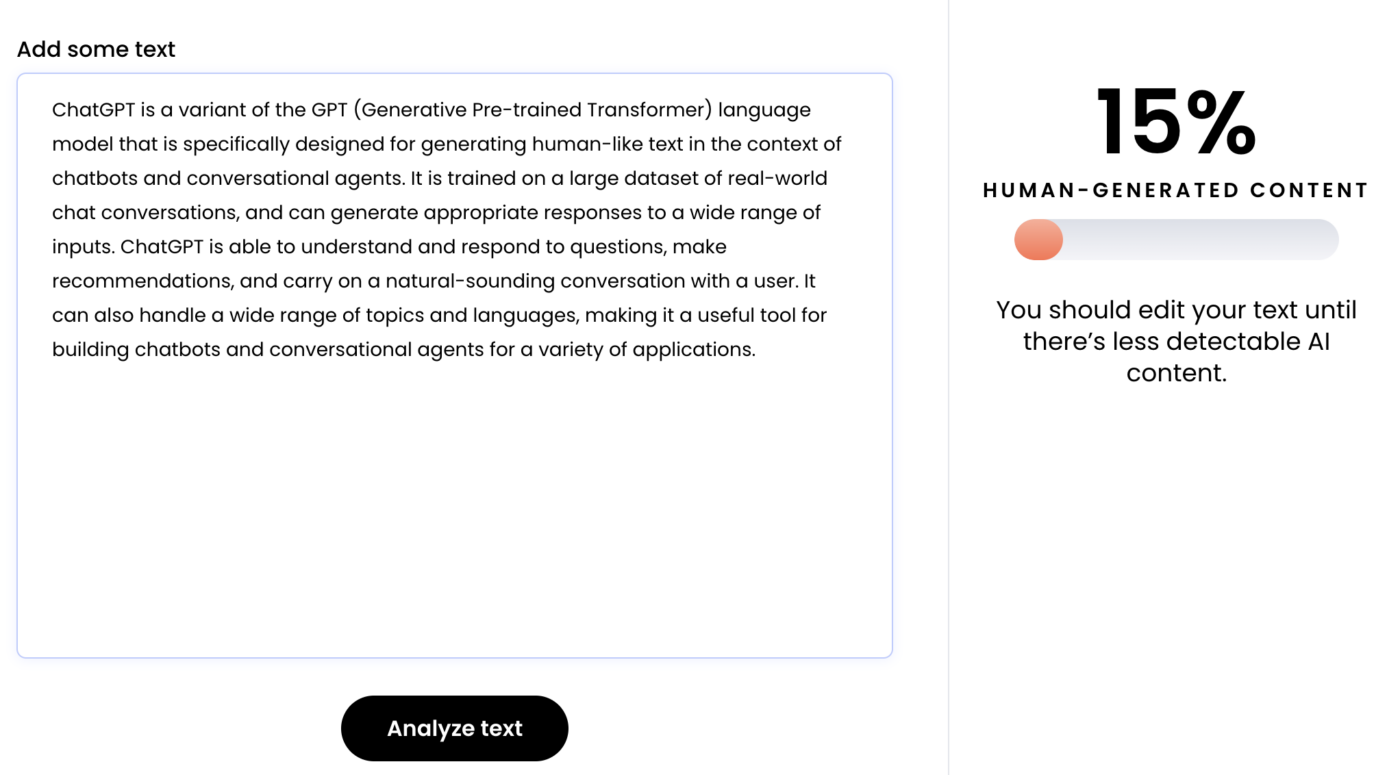

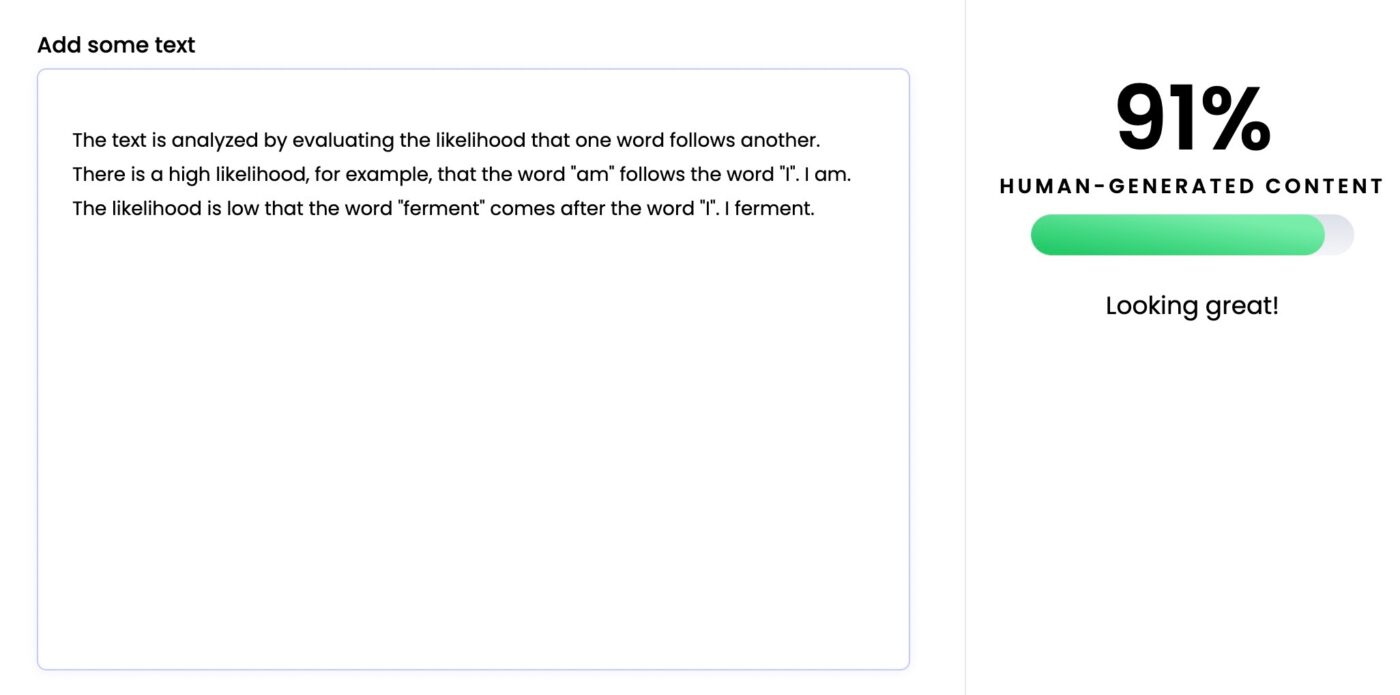

Writer.com’s AI detector

This site analyzes your text and estimates what percentage of it is human-generated. It does not explain how the tool works.

https://writer.com/ai-content-detector/

According to writer.com’s AI detector, only 15% of text 1 was written by a human.

This result gives a useful indication, but it may not be enough to tell with certainty.

For text 2, the result was quite accurate, with an estimated 91% human-generated content.

Gltr.io

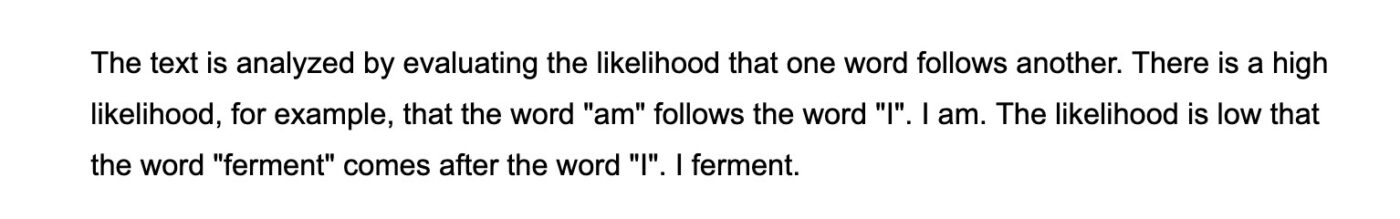

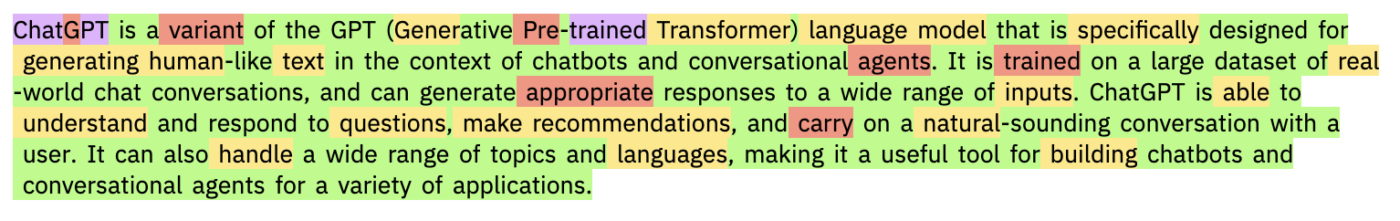

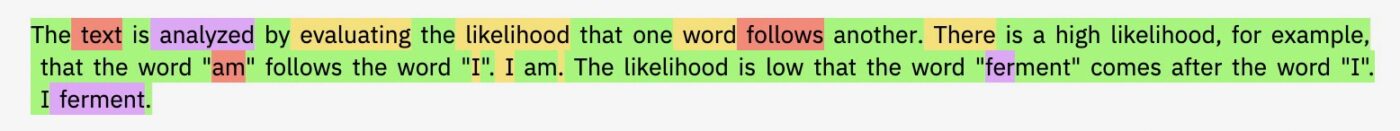

GLTR (Giant Language Model Test Room), pronounced “glitter,” was created by researchers from MIT and Harvard, and the website explains its method in detail. The tool uses a language model to evaluate how likely each word is to follow the words that come before it. For example, the word “am” is very likely to follow the word “I” (“I am”), whereas “ferment” is unlikely to follow it (“I ferment”).
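To make the idea of “how likely one word is to follow another” concrete, here is a minimal Python sketch. It is a toy, not GLTR’s actual method: GLTR scores each word with the GPT-2 language model using all of the preceding text, whereas this sketch only counts word pairs in a tiny made-up corpus. `CORPUS`, `next_word_probs`, and `rank_of` are hypothetical names chosen for illustration.

```python
from collections import Counter
from typing import Dict, Optional

# Hypothetical toy corpus, lowercased and split on spaces.
# GLTR itself uses GPT-2 and the full preceding context, not word-pair counts.
CORPUS = "i am happy . i am here . i am tired . i think so . i think not . i ferment grapes ."


def next_word_probs(corpus: str, prev: str) -> Dict[str, float]:
    """Estimate P(word | prev) from how often each word directly follows `prev`."""
    tokens = corpus.split()
    # Count every word that appears immediately after `prev`.
    counts = Counter(nxt for cur, nxt in zip(tokens, tokens[1:]) if cur == prev)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def rank_of(corpus: str, prev: str, word: str) -> Optional[int]:
    """Return the 1-based rank of `word` among the predictions after `prev`."""
    probs = next_word_probs(corpus, prev)
    # Most likely next word first.
    ranked = sorted(probs, key=probs.get, reverse=True)
    return ranked.index(word) + 1 if word in ranked else None


probs = next_word_probs(CORPUS, "i")
print(probs["am"], rank_of(CORPUS, "i", "am"))                      # 0.5 1
print(round(probs["ferment"], 3), rank_of(CORPUS, "i", "ferment"))  # 0.167 3
```

In this toy corpus, “am” is the top prediction after “i,” while “ferment” ranks third; it is this rank, rather than the raw probability, that GLTR’s color coding is based on.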

http://gltr.io/dist/index.html

Words that rank among the model’s top 10 predicted words are highlighted in green, the top 100 in yellow, and the top 1,000 in red; even less likely words are purple. A human-written text should therefore contain fewer green and yellow words than an AI-generated text.
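The color scheme amounts to sorting each word into a bucket by its rank. The Python sketch below assumes the thresholds described above (top 10, top 100, top 1,000) and uses made-up ranks rather than real GLTR output; `gltr_color` and `color_shares` are hypothetical helpers, not part of GLTR.

```python
from collections import Counter
from typing import Dict, List


def gltr_color(rank: int) -> str:
    """Map a word's 1-based rank among the model's predictions to a color."""
    if rank <= 10:
        return "green"
    if rank <= 100:
        return "yellow"
    if rank <= 1000:
        return "red"
    return "purple"


def color_shares(ranks: List[int]) -> Dict[str, float]:
    """Return the fraction of words that fall into each color bucket."""
    counts = Counter(gltr_color(r) for r in ranks)
    total = len(ranks)
    return {c: counts[c] / total for c in ("green", "yellow", "red", "purple")}


# Hypothetical per-word ranks (made-up numbers, not our test results).
ranks = [1, 3, 2, 15, 40, 250, 1, 5, 2000, 8]
shares = color_shares(ranks)
print(shares)  # {'green': 0.6, 'yellow': 0.2, 'red': 0.1, 'purple': 0.1}
print(shares["green"] + shares["yellow"])
```

Summing the green and yellow shares gives a single number to compare between two texts. If, as with our text 2, that number is about the same for a human-written text and an AI-generated one, the colors alone cannot tell them apart.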

The text written by ChatGPT yielded these results:

A human-written text is expected to have more random word choices and irregular syntax, leading to more red and purple words. However, as the example below shows, our text 2 had a similar proportion of green and yellow to red and purple words as the text written by ChatGPT.

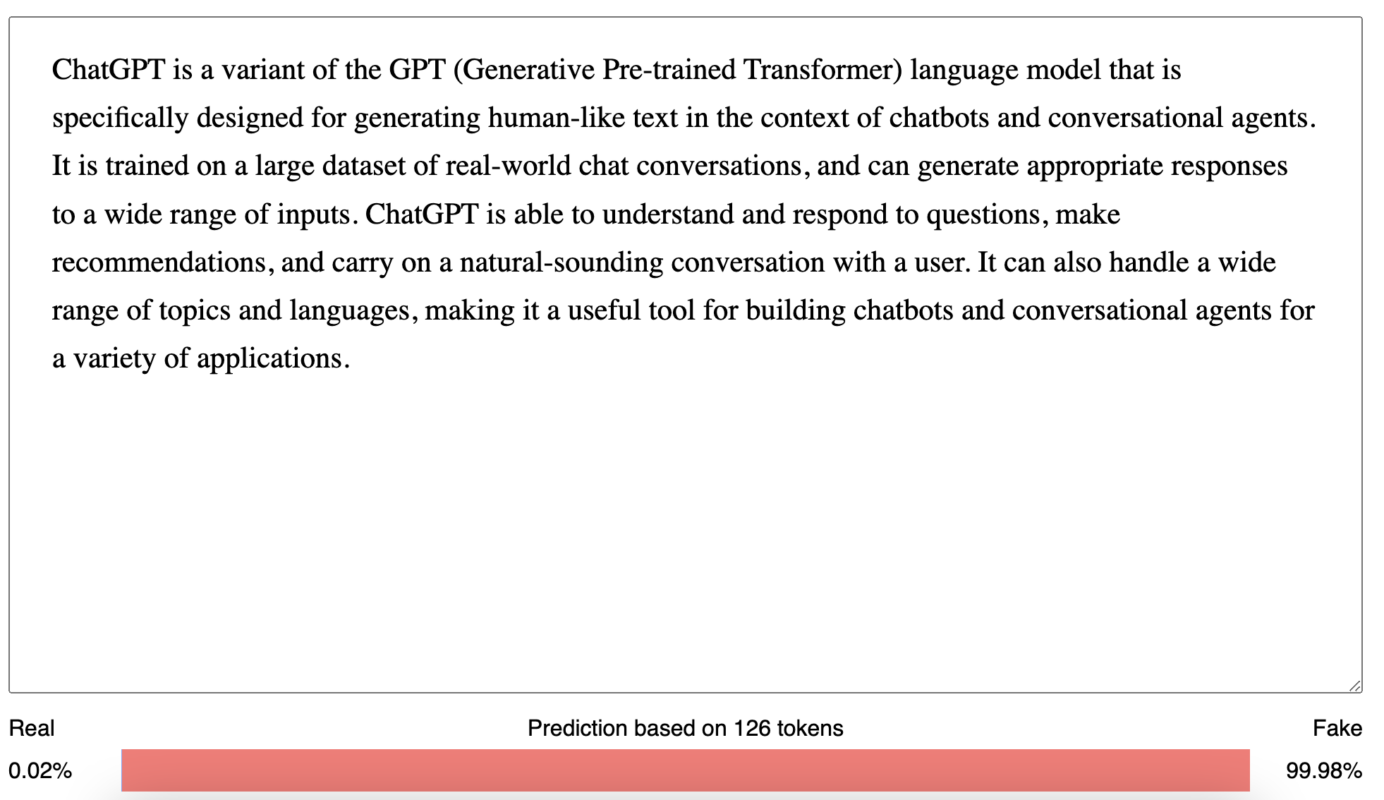

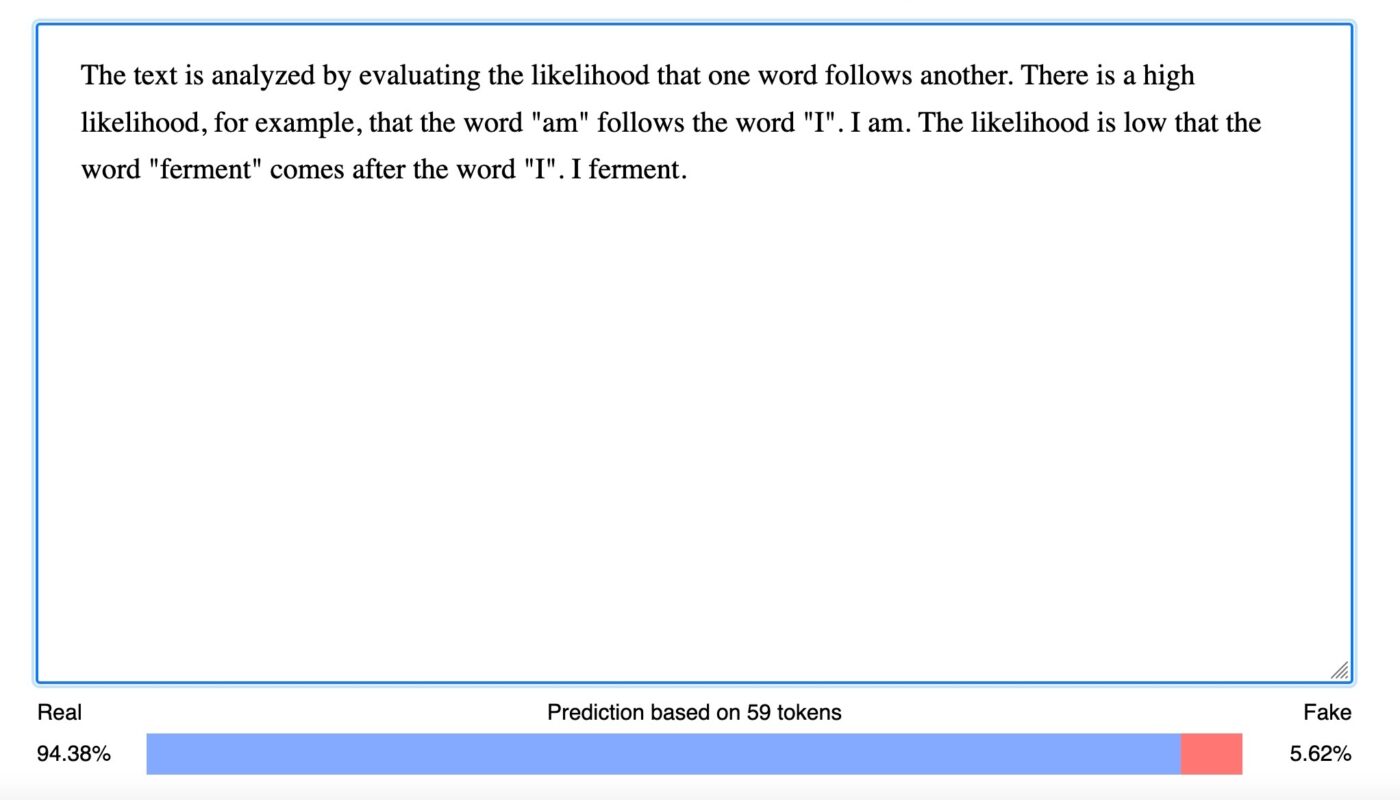

GPT-2 Output Detector Demo

Like writer.com’s detector, this tool estimates the percentage of human-generated content. The method is not described, but the site states that the detector is more accurate on longer texts.

https://huggingface.co/openai-detector/

When we tested this detector on the text written by ChatGPT, it estimated that 99.98% of the text was AI-generated.

When we tested it on the text we wrote, it predicted 94.38% human-generated content.

Conclusion

There are many online tools for estimating whether a text is AI-generated. Teachers can use them to get a better idea of whether a submitted text was written by the student or by AI, although, as our tests showed, the results are indications rather than proof. We can expect this technology to improve as AI tools like ChatGPT develop and become a more significant part of our lives.

If you know of any AI detectors we did not discuss in this article, please share them in the comments below.